Navigating the New Frontier of Cyber Fraud in the Age of Deepfakes

Chapter 1: Understanding Deepfakes and Cyber Fraud

Welcome to a new era of cyber fraud, one driven by the emergence of deepfake technology. Deepfakes give malicious actors new and alarming ways to deceive their targets, and their potential impact on fraud is significant.

Imagine interviewing a candidate for a critical database administrator role over a video conferencing platform like Zoom. Let's call him John. His credentials look solid, he clears every reference check, and his qualifications make him an ideal fit for a role that involves handling sensitive data. Then you discover that the person you interviewed was not John at all, but a cybercriminal using deepfake technology to impersonate him.

Rather than breaching your organization’s defenses through traditional hacking methods, this individual gains access by masquerading as the legitimate candidate. The misuse of Artificial Intelligence (AI) to orchestrate such attacks is a growing concern, especially as AI technologies become more widely adopted.

Cybercriminals are constantly looking for new ways to exploit weaknesses in corporate defenses, and deepfakes offer them a promising new avenue.

Deepfakes are defined as:

Synthetic media in which a person in an existing image or video is swapped with another individual's likeness. Although creating fake content isn't a novel concept, deepfakes utilize advanced techniques from machine learning and AI to produce visual and audio content that can easily mislead viewers.

For years, people have experimented with deepfake technology, superimposing the faces of celebrities and politicians onto existing video footage with strikingly realistic results.

However, the implications of deepfakes extend well beyond entertainment: if misused, they can severely undermine trust. People are generally inclined to believe what they see, especially when it appears to come from an authoritative figure, so the potential for deepfakes to spread misinformation is considerable.

Section 1.1: The Threat to Remote Employment

As remote and hybrid work environments become the norm, the method of conducting interviews has shifted online. Unfortunately, this transition hasn't gone unnoticed by cybercriminals.

On June 28, 2022, the FBI’s Internet Crime Complaint Center (IC3) issued an alert about the rising trend of criminals employing deepfakes to apply for sensitive remote positions that would grant them access to personally identifiable information (PII).

The targeted positions include IT, computer programming, database, and software-related roles. Attackers often use stolen PII to make their applications more credible, presenting another person's identity in an attempt to get through pre-employment background checks.

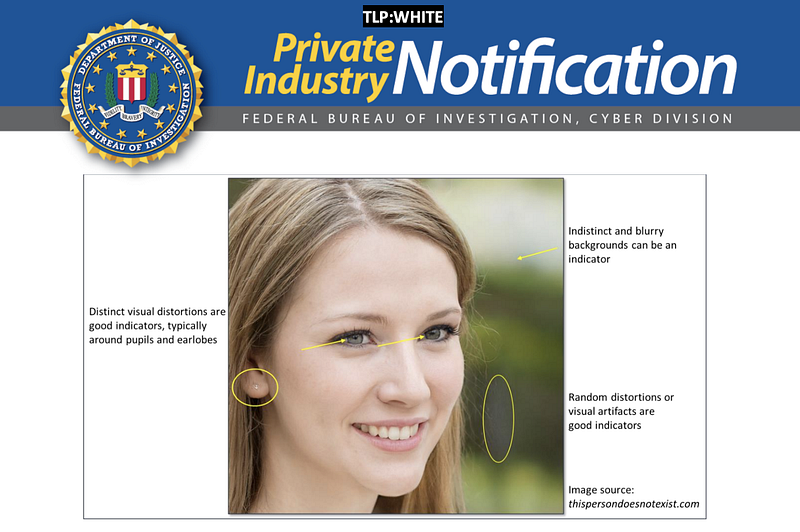

Employers who reported these incidents noticed red flags such as lip movements that did not match the audio, or coughs and sneezes that were not aligned with what appeared on screen. However, as AI technology continues to improve, attackers are likely to find these flaws increasingly easy to eliminate.

The FBI has also warned of a new type of threat known as Business Identity Compromise (BIC). Where Business Email Compromise (BEC) relies on spoofed or compromised email accounts, BIC represents a significant evolution of that tradecraft: attackers use advanced content generation tools to create synthetic corporate personas or to convincingly mimic existing employees.

Chapter 2: Countering Deepfake Threats with AI

As the landscape of cyber threats evolves, organizations must adapt their defenses accordingly. One promising strategy is to use AI and machine learning for identity verification and authentication.

Traditional methods, such as a simple video call, may no longer be enough for sensitive positions. Companies should consider investing in machine learning solutions that incorporate automation and "liveness detection," which checks whether the face on camera belongs to a live person present in real time rather than a replayed or synthesized feed, to catch attackers posing as legitimate candidates.

In addition to AI implementation, organizations should adopt the following best practices:

- Assess Risks: Evaluate current procedures for conducting interviews for sensitive roles and explore alternative authentication methods to verify applicants' identities.

- Promote Awareness: Integrate deepfake awareness into your cybersecurity program to ensure that all employees, especially in HR, understand the associated risks. Training should cover how to recognize deepfakes and the common visual inconsistencies that can indicate manipulation.

- Revise Incident Response Plans: Update your incident response processes to include scenarios involving deepfake impersonation, ensuring that legal and media teams are prepared to respond.

As we navigate this uncharted territory of AI-driven threats, it's imperative for organizations and their cybersecurity teams to strengthen their defenses. Social engineering is no longer limited to simple email-based tricks, and organizations that fail to evolve risk falling behind as AI-powered attacks grow more sophisticated.

Good luck on your journey through the world of AI!

For more insights on this topic, consider checking out my discounted course on AI governance and cybersecurity, which discusses emerging AI risks and strategies for mitigation.

The first video, "The Future of Fraud: Understanding Deepfake Scams and Building Resilience in the Face of AI," explores the evolving landscape of cyber fraud and the role deepfakes play in it.

The second video, "McAfee Launches AI-Powered Deepfake Detector," showcases advancements in technology aimed at combatting the threats posed by deepfakes.