N-BEATS: A Novel Approach to Time-Series Forecasting


In the realm of data science, time-series forecasting holds a unique position. It is one of the few areas where deep learning and transformer models have not consistently eclipsed traditional approaches. The Makridakis M-competitions provide an excellent benchmark for assessing progress in this field, with the fourth edition, known as M4, serving as a prime example.

In the M4 competition, the top-performing entry was ES-RNN, a hybrid of an LSTM and exponential smoothing developed at Uber. In contrast, the six pure machine learning entries among the fifty-seven submissions barely improved on the competition baseline, underscoring the challenges faced by more advanced techniques.

However, the landscape shifted with the introduction of N-BEATS by ElementAI, a company co-founded by Yoshua Bengio. This pure deep learning model not only improved on ES-RNN's M4 result by 3% but also introduced several new capabilities, including interpretable forecasts and zero-shot transfer learning.

This article delves into the following aspects of N-BEATS:

- The architecture and functionality of N-BEATS, highlighting its strengths.

- The model's ability to produce interpretable forecasts.

- N-BEATS's capabilities in zero-shot transfer learning.

- The limitations of ARIMA in supporting transfer learning.

Let’s explore these topics in greater detail.

Understanding N-BEATS

N-BEATS is a fast, interpretable deep learning model that mimics the mechanics of statistical models using doubly residual stacks of fully connected layers. The name stands for Neural Basis Expansion Analysis for Time Series. The model was created by ElementAI, which was later acquired by ServiceNow.

The model is noteworthy for several reasons:

- It was the first pure deep learning model to outperform well-established statistical methods on standard forecasting benchmarks.

- It delivers interpretable forecasts.

- It lays the groundwork for transfer learning in time-series analysis.

Around this time, Amazon introduced its own time-series model, DeepAR, which incorporated deep learning techniques alongside statistical concepts like maximum likelihood estimation.

Key Features of N-BEATS

Here are some prominent characteristics of N-BEATS:

- Support for multiple time series: N-BEATS can be trained on many time series at once, each potentially following a different statistical distribution.

- Fast training: The model has no recurrent or self-attention layers, which results in quicker training and stable gradients.

- Multi-horizon forecasting: The model produces predictions for several future time steps in a single pass.

- Interpretability: Two versions of the model exist, a generic version and an interpretable version that decomposes the forecast into trend and seasonal components.

- Zero-shot transfer learning: A model trained on one set of time series can forecast a different, unseen set without retraining, and still achieve strong results.

The original implementation of N-BEATS by ElementAI is designed for univariate time-series only. However, the Darts library has released a version that accommodates multivariate time series and probabilistic outputs. This article focuses on the original iteration.

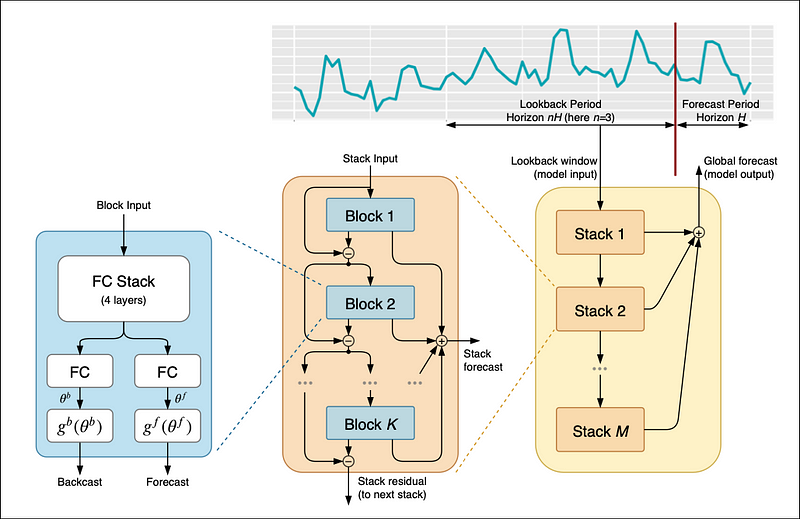

N-BEATS Architecture Overview

The architecture of N-BEATS is straightforward yet deep.

Key components include:

- Block (blue): The fundamental processing unit.

- Stack (orange): A collection of blocks.

- Final model (yellow): A collection of stacks.

Each layer in the network is simply a dense layer.

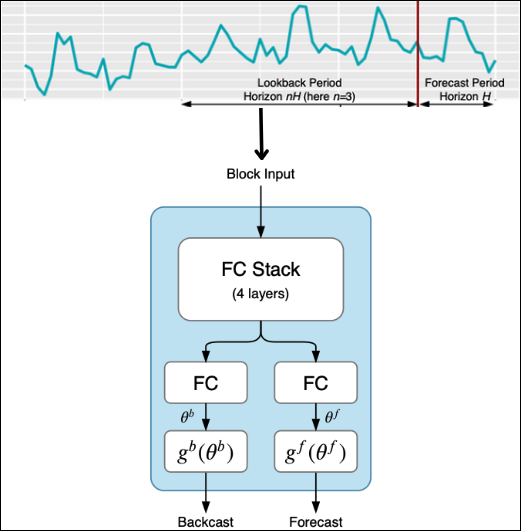

The Basic Block

In N-BEATS, if we denote the forecasting horizon as H, the lookback window is a multiple of H (the paper uses lengths between 2H and 7H).

In the example shown, the basic block looks back over three days (72 hours, i.e. 3H) to predict power consumption for the subsequent 24 hours (H = 24). The input passes through a four-layer fully connected network, whose output feeds two branches.

Each branch produces a set of expansion coefficients, which a basis layer then projects into the backcast (the block's reconstruction of its input window) and the forecast (its prediction for the horizon). This projection is known as neural basis expansion.
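The block described above can be sketched in a few lines of plain Python. This is only an illustration of the forward pass with untrained random weights, not the authors' implementation: the class name `GenericBlock`, the helper functions, the hidden width of 16 and the coefficient size `theta_dim=8` are all assumptions chosen to keep the example small.

```python
import random

def dense(x, weights, bias, relu=True):
    """One fully connected layer: y = W x + b, optionally followed by ReLU."""
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, o) for o in out] if relu else out

def init_layer(n_in, n_out, rng):
    """Small random weights and zero bias (illustrative initialisation only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

class GenericBlock:
    """Hypothetical minimal block: 4 dense layers, then two linear branches."""

    def __init__(self, lookback, horizon, hidden=16, theta_dim=8, seed=0):
        rng = random.Random(seed)
        sizes = [lookback] + [hidden] * 4
        # Four-layer fully connected network shared by both branches.
        self.fc = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
        # Linear layers producing the expansion coefficients.
        self.theta_b = init_layer(hidden, theta_dim, rng)
        self.theta_f = init_layer(hidden, theta_dim, rng)
        # Learned (generic) basis layers mapping coefficients to signals.
        self.basis_b = init_layer(theta_dim, lookback, rng)
        self.basis_f = init_layer(theta_dim, horizon, rng)

    def forward(self, x):
        h = x
        for weights, bias in self.fc:
            h = dense(h, weights, bias)
        theta_b = dense(h, *self.theta_b, relu=False)
        theta_f = dense(h, *self.theta_f, relu=False)
        backcast = dense(theta_b, *self.basis_b, relu=False)
        forecast = dense(theta_f, *self.basis_f, relu=False)
        return backcast, forecast

# 72-hour lookback, 24-hour horizon, as in the power consumption example.
block = GenericBlock(lookback=72, horizon=24)
x = [float(i % 24) for i in range(72)]
backcast, forecast = block.forward(x)
print(len(backcast), len(forecast))  # 72 24
```

The backcast has the same length as the input window and the forecast has the length of the horizon, which is what lets blocks be chained together.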

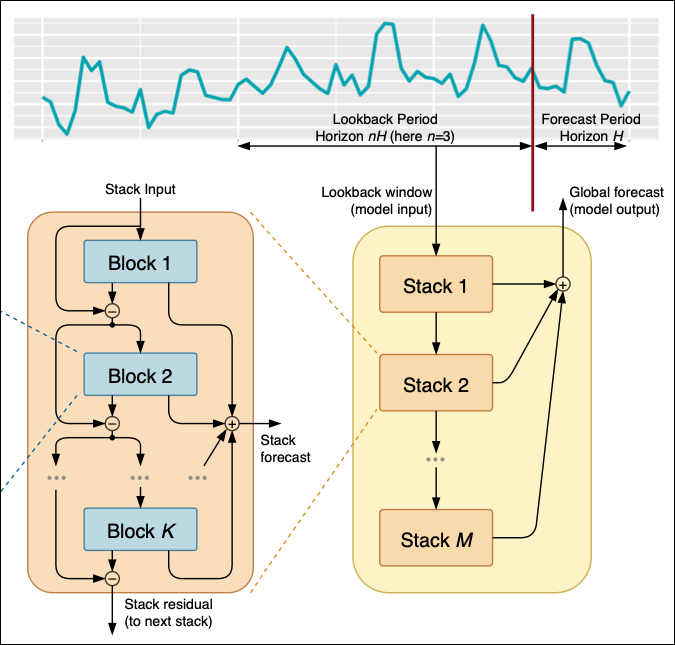

The Stack Structure

To enhance the neural expansion process, multiple blocks are stacked together.

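The first block receives the original input, while each subsequent block receives the residual: the previous block's input minus its backcast. Each block therefore only has to model what the earlier blocks failed to explain. Inside each stack, the backcast and forecast signals form two branches: backcasts are subtracted along the residual branch, and the blocks' forecasts are summed into the stack's forecast. This is the doubly residual stacking approach.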
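A toy example may make the two residual branches easier to follow. The `toy_block` below is a hypothetical stand-in for a trained block (it simply "explains" half of whatever it receives), so the numbers are illustrative only; the part that mirrors the architecture is the loop in `run_stack`.

```python
def toy_block(x, horizon):
    """Hypothetical stand-in for a trained block: explains half of its input."""
    backcast = [0.5 * v for v in x]
    level = sum(backcast) / len(backcast)
    forecast = [level] * horizon
    return backcast, forecast

def run_stack(x, blocks, horizon):
    """Doubly residual stacking: subtract backcasts, sum forecasts."""
    residual = list(x)
    total_forecast = [0.0] * horizon
    for block in blocks:
        backcast, forecast = block(residual, horizon)
        # Branch 1: the next block only sees what is still unexplained.
        residual = [r - b for r, b in zip(residual, backcast)]
        # Branch 2: partial forecasts accumulate into the final forecast.
        total_forecast = [t + f for t, f in zip(total_forecast, forecast)]
    return residual, total_forecast

x = [8.0, 8.0, 8.0, 8.0]
residual, forecast = run_stack(x, [toy_block] * 3, horizon=2)
print(residual)  # [1.0, 1.0, 1.0, 1.0]
print(forecast)  # [7.0, 7.0]
```

With three blocks the residual shrinks from 8 to 4 to 2 to 1, while the partial forecasts 4, 2 and 1 add up to 7.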

Relation to ARIMA

For those familiar with ARIMA, N-BEATS may look similar in certain respects. ARIMA, following the Box-Jenkins methodology, relies on an iterative process of model identification, parameter estimation and model verification that is repeated for each series.

In contrast, N-BEATS learns its parameters end to end, which allows a single model to perform well across many time series without manual parameter adjustment.

Interpretable Architecture of N-BEATS

The N-BEATS model can be made interpretable through several modifications:

- It uses just two stacks, one constrained to model the trend (a polynomial basis) and one constrained to model seasonality (a Fourier basis).

- Each stack comprises three blocks, as opposed to one block per stack in the generic model.

- The basis layer weights are shared at the stack level.
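To give a feel for what the trend and seasonality stacks output, here is a small sketch of fixed basis functions: low-degree polynomials for trend and sines and cosines for seasonality, in the spirit of the N-BEATS paper. The function names, the time grid `t = i / horizon` and the hand-picked coefficients are assumptions for illustration; in the real model the coefficients are predicted by the network.

```python
import math

def trend_basis(horizon, degree):
    """Rows are the powers t^0 .. t^degree on the grid t = i / horizon."""
    t = [i / horizon for i in range(horizon)]
    return [[ti ** k for ti in t] for k in range(degree + 1)]

def seasonality_basis(horizon, harmonics):
    """Rows are a constant followed by cos/sin pairs for each harmonic."""
    t = [i / horizon for i in range(horizon)]
    rows = [[1.0] * horizon]
    for k in range(1, harmonics + 1):
        rows.append([math.cos(2 * math.pi * k * ti) for ti in t])
        rows.append([math.sin(2 * math.pi * k * ti) for ti in t])
    return rows

def expand(theta, basis):
    """Basis expansion: output[i] = sum_k theta[k] * basis[k][i]."""
    steps = len(basis[0])
    return [sum(c * row[i] for c, row in zip(theta, basis)) for i in range(steps)]

H = 4
# Coefficients [1, 2] on a degree-1 basis give the line 1 + 2t.
trend = expand([1.0, 2.0], trend_basis(H, degree=1))
# Coefficients [0, 1, 0] select only the first cosine harmonic.
season = expand([0.0, 1.0, 0.0], seasonality_basis(H, harmonics=1))
print(trend)  # [1.0, 1.5, 2.0, 2.5]
print([round(s, 6) for s in season])
```

Because each coefficient multiplies a known curve, the trend and seasonal parts of a forecast can be inspected separately, which is where the interpretability comes from.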

Experimental Evaluation

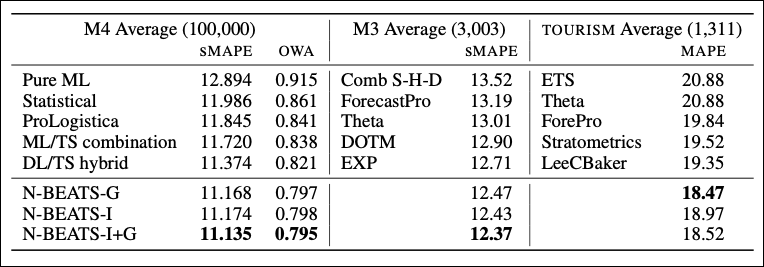

The authors assessed N-BEATS's performance across three prominent datasets—M3, M4, and Tourism.

#### Results Overview

The results show that N-BEATS outperforms the competing models on all three datasets, with the ensemble of N-BEATS-G (generic) and N-BEATS-I (interpretable) achieving the best outcomes.
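The article does not say how the members of the ensemble are combined. The N-BEATS paper reports using the median as the aggregation function, so the sketch below assumes a point-wise median; the function name and the three member forecasts are made-up values for illustration.

```python
from statistics import median

def median_ensemble(forecasts):
    """Combine member forecasts point-wise with the median."""
    return [median(step) for step in zip(*forecasts)]

# Hypothetical forecasts from three ensemble members over a 3-step horizon.
member_forecasts = [
    [10.0, 12.0, 11.0],
    [11.0, 13.0, 10.0],
    [30.0, 12.5, 10.5],
]
print(median_ensemble(member_forecasts))  # [11.0, 12.5, 10.5]
```

Note how the outlying value 30.0 from the third member does not distort the first step of the combined forecast, which is one practical reason to prefer the median over the mean.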

Notably, N-BEATS does not necessitate manual feature engineering or input scaling, making it significantly easier to apply across various tasks.

Zero-shot Transfer Learning

N-BEATS's standout feature is its capacity for zero-shot transfer learning: a model trained on one dataset can make predictions on a different, unseen dataset without being retrained on it.

Conclusion

N-BEATS represents a significant advancement in deep learning for time-series forecasting, establishing a robust framework for zero-shot transfer learning. This positions it as a pioneering model within the field.

In future articles, we will provide programming tutorials related to N-BEATS and review newer developments such as N-HiTS.

