How I Developed a Tool to Enhance Product Documentation Using GPT


Chapter 1: Introduction to the Tool

As a product manager, I use ChatGPT extensively to boost my productivity and have integrated it into many of my projects. It has been useful for tasks ranging from preliminary research to evaluating user feedback. One standout application has been improving my product documentation, which clarifies product logic and supports communication with my colleagues.

However, I often found myself retyping the same prompts, especially the longer, more refined ones needed for high-quality responses. In addition, people unfamiliar with prompt engineering struggled to write well-structured queries that would get the results they wanted from GPT. To address these issues, I built a simple open-source tool called PM Doc Interrogator (source code [1]), which uses GPT to suggest improvements to product requirement documents in just a few clicks.

The icon for PM Doc Interrogator, created using Stable Diffusion.

The project has two aims: to help product managers save time on prompt writing, and to deepen my own understanding of the GPT API and of building applications powered by large language models (LLMs). In this article, I outline the design and development of the tool and share the lessons I learned from building an LLM-based application.

Chapter 2: Defining the Product

Establishing a clear scope and framework is essential in product management, even for a small project. For the PM Doc Interrogator, my goal was a tool for product managers who want to make their documents more thorough and clear. I identified two user groups with distinct problems: people who do not want to retype lengthy prompts, and people who lack the expertise to write high-quality prompts.

The PM Doc Interrogator is a simple interface that lets PMs review their documents and receive suggestions for improvement. Users paste in a document and query it through GPT using predefined prompts. What sets the tool apart is its set of prompts tailored to specific product-documentation scenarios, which makes it easy to use without any prompt-writing experience.

Chapter 3: Tool Design and Implementation

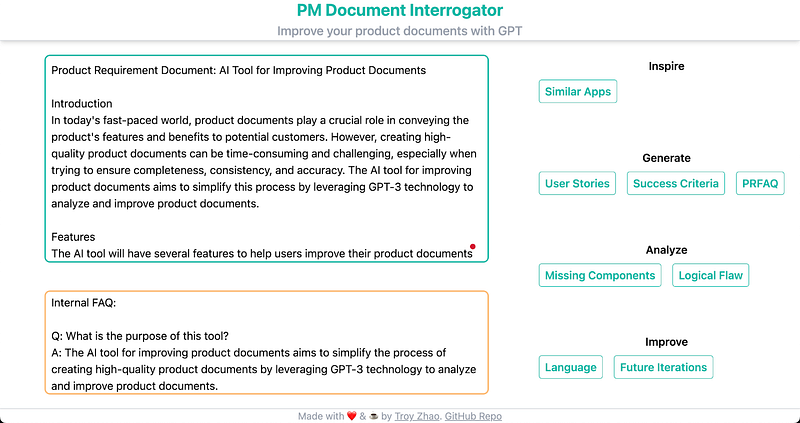

To streamline the project, I opted for a minimalistic interface, incorporating only the essential components: a text area for the original document, a feedback section, and a button panel for submitting requests to GPT.

Users paste their document into the text area and click one of the buttons in the panel on the right; each button corresponds to a specific product-documentation scenario.

From my experience in drafting product documents and observing other PMs, I pinpointed several common scenarios where GPT could prove beneficial:

- Inspire: Finding similar applications for inspiration.

- Generate: Producing user stories, success criteria, or PRFAQs based on a draft document.

- Analyze: Identifying missing components that need addressing in a product requirement document (PRD) and spotting logical inconsistencies.

- Improve: Enhancing language for clarity and formality, and brainstorming potential future iterations for the product.

For each scenario, I wrote a specific prompt that includes the original document and tells GPT exactly what to do with it. The prompt is assembled at request time and uses prompt-engineering techniques, such as explicit instructions and delimiters around the document, to make the feedback more consistent and better structured and to reduce off-topic or inaccurate output.

Here’s an example of a prompt for creating a PRFAQ:

"Given the following product requirement document, write a PRFAQ in Amazon style. The document should contain a short press release of the product, an internal FAQ for addressing questions from stakeholders, and an external FAQ for customers and other external parties. Be comprehensive, logical, and concise. --START OF DOCUMENT--" + document_text + "--END OF DOCUMENT--";
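To illustrate how such a prompt can be assembled per scenario, here is a minimal TypeScript sketch; the tool's actual source [1] may be organized differently. It assumes a simple lookup table from scenario to instruction text. The PRFAQ wording comes from the example above, while the `missingSections` entry, the `Scenario` type, and the `buildPrompt` function are hypothetical names used only for illustration. The document is wrapped in START/END delimiters so the model can tell the instructions apart from the content.

```typescript
// Hypothetical scenario keys; a real tool would have one per button
// (Inspire, Generate, Analyze, Improve).
type Scenario = "prfaq" | "missingSections";

// Instruction text per scenario. The PRFAQ wording is taken from the article;
// the "missingSections" wording is an illustrative assumption.
const INSTRUCTIONS: Record<Scenario, string> = {
  prfaq:
    "Given the following product requirement document, write a PRFAQ in Amazon style. " +
    "The document should contain a short press release of the product, an internal FAQ " +
    "for addressing questions from stakeholders, and an external FAQ for customers and " +
    "other external parties. Be comprehensive, logical, and concise.",
  missingSections:
    "Given the following product requirement document, list the sections or details " +
    "that are missing and point out any logical inconsistencies.",
};

// Combine the scenario's instructions with the user's document, wrapping the
// document in explicit delimiters so it is not mistaken for instructions.
function buildPrompt(scenario: Scenario, documentText: string): string {
  return (
    INSTRUCTIONS[scenario] +
    "\n--START OF DOCUMENT--\n" +
    documentText.trim() +
    "\n--END OF DOCUMENT--"
  );
}
```

A button handler would then call something like `buildPrompt("prfaq", textArea.value)`, where `textArea` is a hypothetical reference to the document text area. Keeping all prompts in one table makes it easy to add a scenario without touching the request code.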

For the tool's minimum viable product (MVP), time constraints led me to build a front-end-only version: users enter their own API key, and requests go directly from their browser to OpenAI's API. The results are streamed back and displayed in the feedback section. I also generated the favicon with Stable Diffusion, since I lack the design skills to create an icon myself.
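A front-end-only setup like this comes down to one HTTPS request from the browser. The sketch below assumes OpenAI's Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`) with the API key sent as a bearer token; the `buildChatRequest` helper and the default `gpt-3.5-turbo` model are illustrative choices, not necessarily what PM Doc Interrogator uses. It only builds the request so it can be inspected; sending it is a single `fetch` call.

```typescript
// Assumed endpoint: OpenAI Chat Completions.
const OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions";

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the request for a user-supplied key and an already-assembled prompt.
// The default model name is an assumption for illustration.
function buildChatRequest(apiKey: string, prompt: string, model = "gpt-3.5-turbo"): ChatRequest {
  const key = apiKey.trim();
  if (key === "") {
    // Fail early in the UI instead of sending a request that will be rejected.
    throw new Error("Please enter your OpenAI API key.");
  }
  return {
    url: OPENAI_CHAT_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${key}`,
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
        stream: true, // ask the API to send the answer incrementally
      }),
    },
  };
}
```

In the browser, the request would be sent with `const { url, init } = buildChatRequest(apiKey, prompt); const response = await fetch(url, init);`. Because the user types in their own key and it goes straight to OpenAI, no server of your own ever handles it, which is what makes a backend-free MVP possible.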

Chapter 4: Insights from Development

Throughout the development of this tool, I encountered several challenges worth noting for anyone considering building a similar application.

One significant issue is context size. The GPT API limits how many tokens a single request can use, and the prompt and the response share that budget (about 4,000 tokens for GPT-3.5 [3]), which makes very long documents hard to handle. Due to time constraints, I capped documents at around 2,000 words; at the common rule of thumb of roughly 0.75 words per token, that is about 2,700 tokens, leaving room for the instructions and the response. Possible solutions for future versions include: (1) for detailed prompts that target specific sections, splitting the document into chunks and passing only the relevant ones to the API; and (2) for broader prompts, such as reviewing the overall structure, recursively summarizing the document with the GPT API until it fits in a single prompt.
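The word cap and both fallback strategies can be sketched in a few functions. This is a minimal TypeScript illustration under stated assumptions: words are counted by splitting on whitespace (a rough proxy for tokens), chunks break on blank-line paragraph boundaries, and `summarize` stands in for a caller-supplied GPT call. `MAX_WORDS`, `countWords`, `isWithinLimit`, `chunkByWords`, and `condense` are hypothetical names, and choosing which chunks are relevant for strategy (1) is left out.

```typescript
// Hypothetical cap mirroring the article's ~2,000-word limit.
const MAX_WORDS = 2000;

function countWords(text: string): number {
  const trimmed = text.trim();
  return trimmed === "" ? 0 : trimmed.split(/\s+/).length;
}

function isWithinLimit(text: string): boolean {
  return countWords(text) <= MAX_WORDS;
}

// Strategy (1): split into chunks of at most maxWords words, breaking on
// paragraph boundaries; a paragraph longer than maxWords is split by words.
function chunkByWords(text: string, maxWords: number): string[] {
  const chunks: string[] = [];
  let current: string[] = [];
  let currentCount = 0;
  const flush = () => {
    if (current.length > 0) {
      chunks.push(current.join("\n\n"));
      current = [];
      currentCount = 0;
    }
  };
  for (const paragraph of text.split(/\n\s*\n/)) {
    const words = paragraph.trim().split(/\s+/).filter(Boolean);
    if (words.length === 0) continue;
    if (words.length > maxWords) {
      flush();
      for (let i = 0; i < words.length; i += maxWords) {
        chunks.push(words.slice(i, i + maxWords).join(" "));
      }
      continue;
    }
    if (currentCount + words.length > maxWords) flush();
    current.push(paragraph.trim());
    currentCount += words.length;
  }
  flush();
  return chunks;
}

// Strategy (2): summarize every chunk, join the summaries, and repeat until the
// text fits. maxRounds bounds the loop if summaries do not shrink the text.
async function condense(
  text: string,
  maxWords: number,
  summarize: (chunk: string) => Promise<string>,
  maxRounds = 5,
): Promise<string> {
  let current = text;
  for (let round = 0; round < maxRounds && countWords(current) > maxWords; round++) {
    const summaries = await Promise.all(chunkByWords(current, maxWords).map(summarize));
    current = summaries.join("\n\n");
  }
  return current;
}
```

Because `condense` gives up after `maxRounds` passes, a summarizer that fails to shorten its input cannot loop forever; the caller should still check the result with `isWithinLimit` (or an equivalent) before sending it.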

Another challenge was response latency. During testing, I noticed that waiting for the API to return a complete response left users unsure whether the tool was working at all. To improve the experience, I implemented streaming, which displays the response incrementally, a few words at a time, as chunks arrive from the API. This made the tool feel far more responsive and reduced frustration while waiting. I recommend that any application generating lengthy LLM responses consider streaming, even though it makes content moderation harder, because text reaches the user before the full response is available to check.
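On the client, streaming means requesting `stream: true` and reading the response body incrementally. OpenAI's streaming responses arrive as server-sent events: lines of the form `data: {...}` whose JSON carries the new text in `choices[0].delta.content`, ending with `data: [DONE]`. The sketch below assumes that format; `parseSseLines`, `readCompletionStream`, and the `ByteReader` interface are hypothetical names. It keeps any partial line in a buffer, since a network chunk can end in the middle of an event.

```typescript
// Minimal shape of the reader returned by response.body.getReader().
interface ByteReader {
  read(): Promise<{ done: boolean; value?: Uint8Array }>;
}

// Pull text deltas out of buffered event-stream text. Returns the deltas,
// whether the [DONE] marker was seen, and the incomplete trailing line (if any).
function parseSseLines(buffer: string): { deltas: string[]; done: boolean; rest: string } {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? ""; // the last piece may be an unfinished line
  const deltas: string[] = [];
  let done = false;
  for (const raw of lines) {
    const line = raw.trim();
    if (!line.startsWith("data:")) continue; // skip blank separators and comments
    const data = line.slice("data:".length).trim();
    if (data === "[DONE]") {
      done = true;
      break;
    }
    const content = JSON.parse(data).choices?.[0]?.delta?.content;
    if (typeof content === "string") deltas.push(content); // role-only deltas have no content
  }
  return { deltas, done, rest };
}

// Read the stream chunk by chunk, call onDelta for each new piece of text
// (e.g. to append it to the feedback area), and resolve with the full answer.
async function readCompletionStream(reader: ByteReader, onDelta: (text: string) => void): Promise<string> {
  const decoder = new TextDecoder();
  let buffer = "";
  let full = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    buffer += decoder.decode(value, { stream: true }); // handles multi-byte characters split across chunks
    const parsed = parseSseLines(buffer);
    buffer = parsed.rest;
    for (const delta of parsed.deltas) {
      full += delta;
      onDelta(delta);
    }
    if (parsed.done) break;
  }
  return full;
}
```

In the browser, this would be called as `readCompletionStream(response.body!.getReader(), (t) => { feedback.textContent += t; })`, where `feedback` is a hypothetical reference to the feedback section element.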

Chapter 5: Conclusion and Future Directions

Although this project is a simple front-end application built over a weekend, it could evolve into a more sophisticated product. Future versions could target problems beyond product documentation, add a proxy backend that holds customized prompts, or connect to a vector database so that responses can reference company documents.

Source code repo & related readings