The Decline of Dimensional Data Modeling in Modern Data Practices

Written on

Chapter 1: A New Era in Data Engineering

My initial experience in Silicon Valley back in 2019 came with an unexpected revelation: I found no dimensional data marts. Previously, I was accustomed to the practices of linking facts to dimensions, articulating the principles of normalization, and advocating for data modeling best practices. I considered myself well-versed in slowly-changing dimensions and their application. Dimensional data modeling, a concept popularized by Ralph Kimball in his 1996 publication, serves to structure data within a data warehouse. Although this methodology has its merits, I assert it primarily exists to optimize computing processes, categorize data by themes, and enhance storage efficiency. The foundational motives behind dimensional modeling have evolved significantly; thus, it's pertinent to revisit its origins and assess their relevance in today's landscape.

Source: unsplash.com

In the early computing era, data storage was exorbitantly priced—around $90,000 back in 1985. Given these costs, it was essential to organize data in a manner that minimized redundant storage. This led to the use of pointers through database keys, linking unique identifiers to multiple records containing the same information. The concept of database normalization emerged to illustrate how much a database could be streamlined and optimized for storage. Instead of repeatedly storing lengthy descriptive values, we could store them once and connect them to the relevant records.

Computational efficiency was equally crucial during this time, with the most advanced computers achieving 1.9 gigaflops at a staggering cost of $32 million in 1985. For context, today’s leading computers can perform over 400 petaflops—around 20,000 times more. In the initial stages of database development, reducing operational frequency and complexity could save companies vast sums. For instance, rather than analyzing lengthy string values, a single integer could represent unique instances, allowing for more efficient relationships.

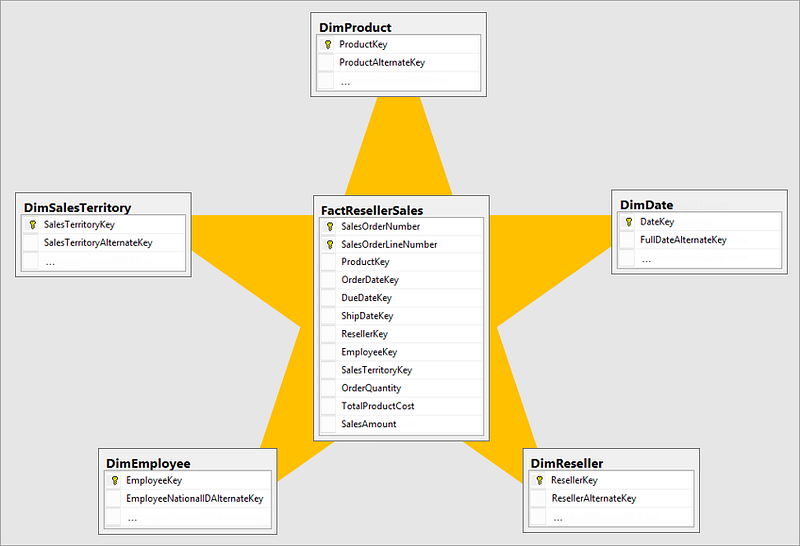

To achieve these efficiencies, data was structured into topic-focused models, with the star schema being a prominent example.

The star schema's advantage lay in its fact table at the center, where indexed values were easily accessible. Conversely, the more complex values were stored in dimension tables, enabling selective retrieval and reducing processing costs. If new dimensions related to the fact table emerged, additional dimension tables would need to be created, relationships enforced, and normalization maintained. Successful dimensional modeling involved deconstructing source data tables, distributing them across multiple tables, and, if executed correctly, allowing for reassembly back into the original table if needed.

Why is dimensional modeling becoming obsolete?

Storage Costs Have Plummeted

The era of database normalization appears outdated. Currently, the cost of storing 1GB of AWS Cloud data is merely 2 cents per month. The advantages of breaking down extensive tables into star or snowflake schemas yield diminishing returns, as storage costs are negligible. This trend is applicable to tables of all sizes and organizations.

Affordability of Computational Power

While historical savings from optimizing data models could amount to hundreds of thousands, if not millions, of dollars, such justifications no longer hold. The speed of operations is now exceptionally swift, and with cloud computing, scaling resources for intensive queries is straightforward.

Complexity for End-Users

For the average data user, particularly businesses relying on data insights, dimensional models can be challenging to grasp. Data engineers may find these models intuitive, but users often prefer familiar formats like spreadsheets. It is considerably easier to teach basic SQL commands than to explain the intricacies of dimensional models and the rationale behind their structure.

Maintenance Challenges

Integrating new columns from source systems into data models can be labor-intensive, whereas new columns in source systems require no such resources. Although modern data modeling tools have simplified integration, failing to adjust the data model for new columns often renders them unusable for end-users.

What Lies Ahead for Data Design?

The advantages of dimensional data modeling are waning. Just as cubes rose to prominence and subsequently diminished, star schemas have had their moment. Moving forward, data lakes and lakehouses are poised to take center stage. Data lakes offer enhanced usability for businesses, require minimal maintenance, and do not demand additional engineering resources for setup.

The primary advantage of data lakes is their user-friendliness for business operations. Analysts and Business Intelligence Engineers no longer need to interpret complex data models to extract value; instead, data can flow seamlessly from source to end-user. This allows analysts to concentrate on more significant challenges, such as developing predictive modeling pipelines. The success of data lakes highlights that the marginal benefits of reducing compute and storage are no longer relevant, and the focus has shifted back to enhancing usability, presenting a significant improvement to the data ecosystem. Maintenance costs associated with dimensional models can now be redirected towards creating rapid value for businesses.

As for dimensional modeling, it has had its place in history, but much like the cube, it may soon fade into obscurity. While many companies remain dedicated to dimensional modeling, the skills associated with it are unlikely to vanish entirely for years. However, as new teams evaluate the costs of data lakes versus dimensional models, we can expect to see a decline in the latter's prevalence.