Artificial Intelligence in Medicine: Lessons from Cassandra

Chapter 1: The Intersection of AI and Medicine

In this discussion, I will delve into a pioneering study at the forefront of contemporary medicine, utilizing an artificial intelligence (AI) framework to enhance patient care. But to frame this conversation, let's journey back to the late Bronze Age to a city on the shores of present-day Turkey. Despite its imposing walls, the city fell to the Achaeans, who landed and ultimately laid waste to it after a prolonged siege.

The demise of Troy, the city at the center of the Iliad and the Aeneid, was foretold by Cassandra, a daughter of King Priam and a priestess of the city.

Cassandra received the divine gift of prophecy from Apollo, but after she spurned him, he cursed her so that no one would ever believe her warnings. When her brother Paris traveled to Sparta to abduct Helen, she warned him that this would lead to the city's downfall, but he disregarded her counsel. The rest, as they say, is history.

So why recount the story of Cassandra while discussing AI in medicine? Because AI faces a significant challenge akin to her plight.

The evolution of AI in medicine, particularly its machine learning branch, has been dominated by a race for accuracy. The advent of electronic health records has enabled the accumulation of vast amounts of data, far exceeding what was previously possible. This data can be analyzed by various algorithms to predict numerous outcomes, such as whether a patient will require intensive care, whether a gastrointestinal bleed will necessitate intervention, or whether a patient is likely to die within a year.

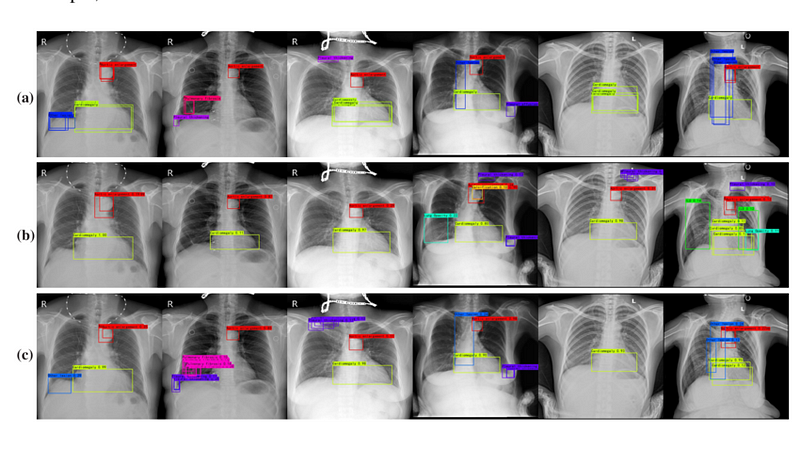

Recent research has shown how advancements in algorithms and data collection have resulted in increasingly precise predictions. In some scenarios, such as interpreting chest X-rays for pneumonia, machine learning models have achieved near-perfect accuracy, comparable to Cassandra's foresight.

However, as Cassandra's tale illustrates, perfect predictions are futile if they go unheeded. This encapsulates the current dilemma in AI and medicine. Many are fixated on achieving high accuracy, overlooking the fact that it is merely a prerequisite for an AI model to be beneficial. It must not only excel in accuracy but also lead to improved patient outcomes.

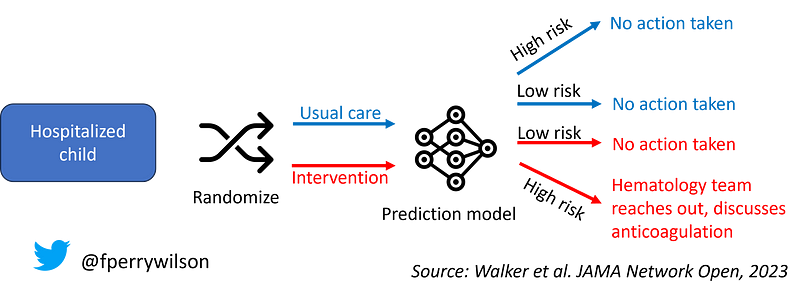

To assess whether an AI model can genuinely assist patients, researchers should evaluate it as they would a new medication: through randomized trials. This is precisely what a team led by Shannon Walker at Vanderbilt University did, as detailed in their publication in JAMA Network Open.

The model in focus predicted the risk of venous thromboembolism (blood clots) in hospitalized pediatric patients. It utilized numerous data points, including past blood clot incidents, cancer history, central line presence, and various lab results, achieving an impressive AUC of 0.90, a testament to how well it separates high-risk from low-risk patients.
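For readers who have not met the AUC before, one way to read it is as the probability that a randomly chosen patient who develops a clot receives a higher risk score than a randomly chosen patient who does not. The sketch below computes that quantity by brute force. The function name `auc` and the risk scores are made up for illustration and have nothing to do with the study's data.

```python
from itertools import product


def auc(scores_pos, scores_neg):
    """Chance that a random positive case outscores a random negative case.

    Ties count as half a win, which matches the usual AUC convention.
    """
    wins = 0.0
    for p, n in product(scores_pos, scores_neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Toy, invented risk scores (NOT from the trial).
clot_scores = [0.08, 0.05, 0.03, 0.01]            # children who developed a clot
no_clot_scores = [0.04, 0.02, 0.01, 0.005, 0.002]  # children who did not

toy_auc = auc(clot_scores, no_clot_scores)
print(f"toy AUC = {toy_auc:.3f}")
```

An AUC of 0.5 would mean the scores rank patients no better than a coin flip, while 1.0 would mean every child who clotted was scored above every child who did not.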

Yet, once again, accuracy is merely the starting point. The researchers deployed the model within the active health record system and recorded the outcomes. For half of the children, no one saw the predictions. For those in the intervention group, an alert was raised if the predicted risk of clotting exceeded 2.5%, and the hematology team would then reach out to the primary team to discuss potential preventive anticoagulation.

This elegant methodology seeks to address the critical question regarding AI models: does utilizing a model improve outcomes when compared to not using one?

Let’s start with the foundational requirement of accuracy. During the trial, predictions were largely accurate. Out of 135 children who developed blood clots, 121 were correctly flagged by the model, a sensitivity of roughly 90%. However, the model also flagged about 10% of children who did not go on to develop clots, which is understandable given the low 2.5% risk threshold for alerts.
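The 121-out-of-135 figure is what is usually called sensitivity: the share of true cases the model caught. A quick check of the arithmetic, using only the two counts quoted above (the variable names are mine):

```python
# Counts quoted in the text above.
clots_total = 135    # children who developed a blood clot
clots_flagged = 121  # of those, how many the model had flagged

sensitivity = clots_flagged / clots_total
missed = clots_total - clots_flagged

print(f"sensitivity = {sensitivity:.1%}, missed cases = {missed}")
```

The roughly 10% of clot-free children who were flagged is a separate quantity, the false positive rate. Its underlying counts are not given here, so it is left out of the calculation.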

Logically, one would expect that children in the intervention group would fare better, since for them Cassandra's warnings were actually being delivered. Yet surprisingly, the rates of blood clots were similar between both groups.

Why was this so? For a warning to be effective, it must result in changes to management. While children in the intervention group were indeed more likely to receive anticoagulation, the increase was minimal. Factors like physician preferences, timing of discharge, and the presence of active bleeding contributed to this outcome.

Examining the 77 children in the intervention group who developed clots reveals valuable insights. Six of them did not meet the 2.5% risk threshold, indicating that the model failed in these instances. Of the remaining 71, only 16 received recommendations for anticoagulation. A significant factor was that many high-risk cases occurred over the weekend when the study team could not contact treatment teams, accounting for nearly 40% of missed cases. The others had contraindications for anticoagulation.

Moreover, of the 16 who were advised to start anticoagulation, only 7 followed through with the recommendation. This highlights the critical gap between accurate predictions and the actual ability to improve patient outcomes. A prediction is worthless if it is incorrect, but it is equally meaningless if the right people are not informed, if they cannot act on the information, or if they choose not to act.
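It can help to lay those numbers out as a funnel, where each step loses some of the children the previous step retained. The sketch below uses only the counts quoted above. The stage labels and the helper name `funnel` are my own.

```python
def funnel(stages):
    """Return (label, count, share_of_previous_stage, share_of_first_stage)."""
    first = stages[0][1]
    rows = []
    prev = first
    for label, count in stages:
        rows.append((label, count, count / prev, count / first))
        prev = count
    return rows


# Counts for intervention-group children who developed clots, as quoted above.
stages = [
    ("developed a clot", 77),
    ("flagged above the 2.5% threshold", 71),
    ("anticoagulation recommended", 16),
    ("recommendation followed", 7),
]

rows = funnel(stages)
for label, count, of_prev, of_first in rows:
    print(f"{label:<34} {count:>3}  {of_prev:6.1%} of previous  {of_first:6.1%} of all")
```

Read this way, the model itself accounts for the smallest drop. The steep losses come afterward, between a correct flag and a recommendation, and between a recommendation and treatment.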

This is the chasm that AI models must bridge moving forward. So the next time a company boasts about the accuracy of its AI model, consider asking whether accuracy is really the most crucial metric. If they insist it is, remind them of Cassandra's tale.

Chapter 2: Video Insights on AI in Medicine

This video delves into the significant challenges faced by AI in medicine, discussing how these technologies can sometimes create more questions than answers.

This video explores the Cassandra AI system, which leverages real-world data to enhance medical predictions and outcomes.