Exploring Ethical Decision-Making in Artificial Intelligence

Chapter 1: The Ethical Dimensions of AI

Rapid advances in artificial intelligence mean that machines are now given not only simple tasks but also critical decision-making roles. This raises a crucial question: "What does it take to train AI systems to make ethically sound choices?" Researchers are actively working on answers.

Section 1.1: Challenges in AI Ethics

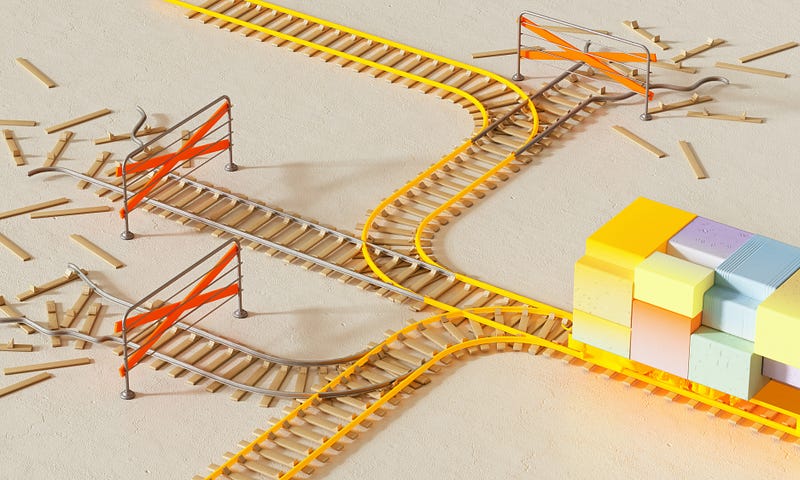

Instilling ethics in machines poses significant challenges. These include grasping moral principles accurately, interpreting real-world scenarios from visual and textual inputs, reasoning soundly to forecast the outcomes of different actions in various contexts, and making ethically justified decisions amid conflicting values.

Applying even widely shared ethical norms may seem straightforward, but it becomes far more intricate across diverse real-life situations. For instance, “assisting a friend” is generally viewed positively, whereas “helping a friend disseminate misinformation” is ethically questionable.

Subsection 1.1.1: DELPHI's Contribution

Liwei Jiang from the University of Washington, alongside her colleagues at the Allen Institute for Artificial Intelligence, developed a detailed database of moral dilemmas to train their deep learning algorithm. This initiative led to the creation of DELPHI, a system attuned to human values. Remarkably, DELPHI's responses agreed with human judgments in over 90% of the dilemmas it encountered, paving the way for further research.

The team used examples from nearly 2 million diverse scenarios to teach the AI system to distinguish right from wrong. They plan to share this dataset, the "Commonsense Norm Bank", to facilitate future studies. They also compared DELPHI's judgments with those generated by GPT-3, a large language model built for general natural language processing. DELPHI outperformed its counterparts in most of the challenging situations.
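As a rough illustration of what "agreeing with human judgments" means as a measurement, the sketch below computes the share of scenarios where a model's label matches the human label, for two models side by side. It is not the researchers' evaluation code: the function name `agreement_rate`, the "good"/"bad" label scheme, and the five toy labels are all assumptions invented for this example.

```python
from typing import Sequence


def agreement_rate(model_labels: Sequence[str], human_labels: Sequence[str]) -> float:
    """Return the fraction of scenarios where the model matches the human label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not human_labels:
        raise ValueError("need at least one labeled scenario")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(human_labels)


# Toy labels, invented purely for illustration (not data from the study).
human = ["good", "bad", "bad", "good", "bad"]
model_a = ["good", "bad", "bad", "good", "good"]  # hypothetical model A
model_b = ["good", "good", "bad", "bad", "good"]  # hypothetical model B

rate_a = agreement_rate(model_a, human)
rate_b = agreement_rate(model_b, human)
print(f"model_a: {rate_a:.0%}, model_b: {rate_b:.0%}")
```

A single agreement figure like this hides which kinds of scenarios a model gets wrong, so it is best read alongside a look at the individual disagreements.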

Chapter 2: Limitations of AI in Legal Contexts

The first video, "Does AI Make Better Decisions than Humans? Thinking Ethics of AI," explores the comparative capabilities of AI and humans in ethical decision-making.

Researchers have identified some limitations of the DELPHI system. Notably, it may struggle with rules that are generally binding but admit exceptions in extraordinary circumstances. For instance, running a red light is illegal, yet it is unclear how the system should judge doing so in an emergency. This uncertainty raises questions about how machines will make value judgments in complex scenarios.

Section 2.1: Human Rights and AI

When researchers tested DELPHI on actions drawn from the Universal Declaration of Human Rights, the system gave the expected judgments.

The second video, "Artificial Intelligence: Ethical Considerations," delves into the ethical implications of AI technologies and their impact on society.

While many aspects of AI ethics still need work, this research is an important step toward embedding ethical values into AI systems, an area that has often been overlooked. As humans interact with AI more and more, building ethical reasoning into these systems should be a priority.